Okay, that's a dumb title. But Zoolander makes me laugh, and it kinda fits. This post is about some books I've read over the last coupla years that really have shaped how I think about statistics, evidence, and science more broadly. It's inspired by some cool recommendations floating around my Twitter feed lately about recommended readings. Nifty.

For background, I went to grad school at UBC. We had a fantastic quantitative program with terrific stats folks like Jeremy Biesanz and Victoria Savalei who did really innovative stuff and taught great courses on stats. But there was a catch: I was a lazy little shit and worked just hard enough to get a decent-but-not-great grade in the 2 required stats classes. I squandered a great opportunity to learn a lot about stats, and I've regretted it since.

Over the last couple of years, though, I've gotten really interested in stats and--with all the free time Assistant Professors have--I decided to try to learn more about stats on my own. These books helped. Let's go.

1. Understanding Psychology as a Science. Zoltan Dienes.

This book is a nice, tidy introduction. It wisely starts out with a gentle introduction to philosophy of science (K-Pop, Kuhn, and Lakatos). This sets the stage for the questions we want to answer as scientists. The remaining three chapters focus on different approaches to statistics. We learn about Neyman and Pearson and the logic of (null) hypothesis testing. Next up is a gentle conceptual introduction to Bayesian stats (focused more on Bayes factors than estimation). Finally, a chapter on likelihood approaches.

This book is an easy read. Full of good ideas. It's approachable, with intuitively compelling examples. And it presents the three statistics families fairly, and in a refreshingly nonjundgmental way. Dienes isn't really pushing any specific approach on the reader, just laying out what they each do. I got a lot out of this book as an Assistant Prof, but it would be great reading for grad students, and I think it would also be valuable for undergrad classes. A+.

Bonus feature: it has cartoons.

2. Statistical Evidence: A Likelihood Paradigm. Richard Royall.

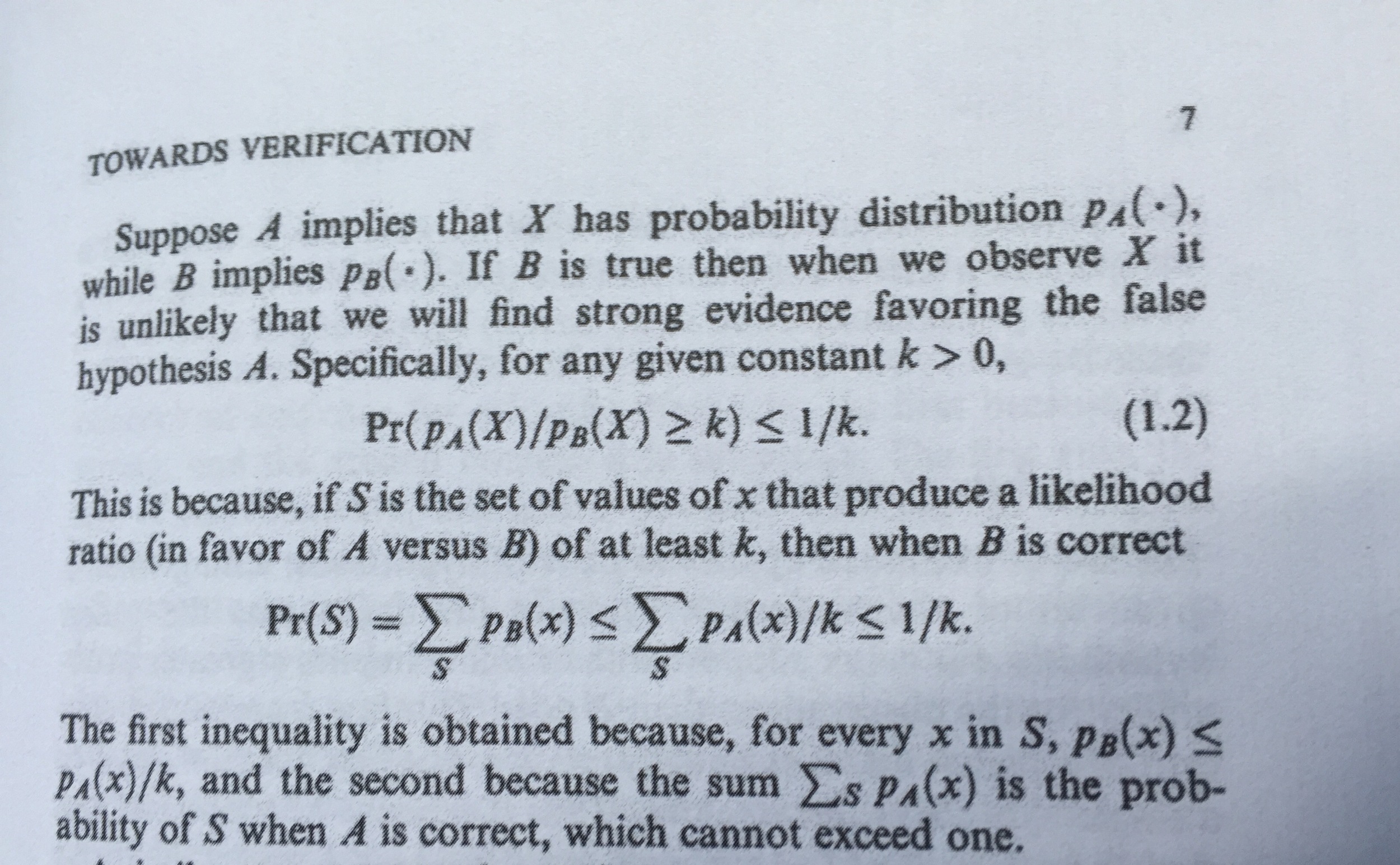

I had never really heard of likelihood approaches until Dienes. I had also read some papers and blogs presenting some of the thought puzzles and dilemmas from Royall, so i was intrigued. I excitedly ordered it. I picked it up, flipped through it, and came across gibberish like this:

I'm told this gibberish is called "equations."

Frightened, intimidated, and a touch ashamed, I put this book down and decided I needed to crawl before walking. Which took me to...

3. An Introduction to Probability and Inductive Logic. Ian Hacking.

The word "Introduction" is right there in the title. That's a good sign. The back of the book also stresses that it's written for a wide range of students, and requires no formal training.

This book walks you through basic concepts in probability. The sort of stuff I'd worked with, but never formalized. It's again super accessible.

It starts with probability basics. Conditional probability. The basic rules of probability. A look at Bayes' Rule. Next it talks about combining probabilities and utilities to make decisions. Then it introduces different theories and definitions of probability (long run frequency versus measures of belief).

Without diving too much into statistics, it also has very conceptual introductions to significance testing, confidence intervals, and Bayesian approaches. There are some gem phrases throughout that I've found useful in brownbags, etc., when people routinely misinterpret p-values or confidence intervals.

A confidence interval has the form: On the basis of our data, we estimate that an unknown quantity lies in an interval. This estimate is made according to a method that is right with probability at least .95. It is completely incorrect to assert anything like this: "The 95% confidence interval for quantity q is I. So the probability that q lies in the interval I is .95."

These statistical principles are tied directly to probability, and the definition of probability one wants to use. And all of that gets tied back in to the process of inductive logic.

This book is written for undergrad philosophy students just being exposed to concepts of probability and inductive logic. I wish I would've read it before taking any statistics courses. With my confidence restored, it was time to turn back to Royall.

2. Statistical Evidence: A Likelihood Paradigm. Richard Royall. Attempt 2.

I decided to dive back in, and not really try to work through all the notation and problems. Turns out that this book is conceptually easy to follow. It has a bunch of nifty thought experiments and dilemmas that are typically about the logic of significance testing and some of its potential shortcomings. It really made me doubt that we should even consider significance tests (and by extension confidence intervals) as measures of evidence. For instance, you'd want an ideal measure of evidence to have a number of properties. The same "value" of evidence should always reflect the same strength of evidence. But p = .04 doesn't always mean the same amount of support for a hypothesis. this is problematic. Ideally, a measure of evidence would be conditional on data at hand, not data that might-have-been-but-weren't collected, or conditional on unknown and unknowable researcher intentions. I'm not sure I 100% buy all of the thought puzzles (there's one on 1- vs 2-tailed tests that i flip flop on whenever I think about it), but they do all force the reader to clarify their thoughts either way.

Towards the end, Royall also mentions some perceived shortcomings of Bayesian approaches (mostly of the form that we don't want subjective priors muddying our inference). But if I had to guess, I'd say that Royall is cooler with Bayesian stats than frequentist stats. But what he really pushes is likelihoods, and the concept that evidence must always be relative. You can't find evidence for a hypothesis in isolation. Instead, you can only describe the relative evidence for a hypothesis in contrast to another hypothesis. Cool stuff.

So, despite my early cowardice with this book, it actually isn't that daunting of a read. It was really good for forcing me to think through the detailed implications of frequentist stats, and for clarifying my thinking about what evidence is more generally. highly recommended.

At this point, I felt like I had a loose grip on the different families of statistics. And I found myself very very curious about Bayesian approaches. I'd played around with Bayes factors a bit, and quantifying evidence for an alternative versus a null seemed like a good idea. But I wanted to learn more.

4. Doing Bayesian Data Analysis. John Kruschke.

Okay, I'm of two minds on this one. I had read Kruschke's "Bayesian Estimation Supersedes the t-test" paper and it piqued my interest. This book looked like it had an applied focus, which was great. I wanted to learn how to actually do some of this shit, to see if it actually improved my analysis.

The positives: Kruschke is a clear (if sometimes wordy) writer, and the early chapters are an excellent conceptual introduction to Bayesian analysis. Chapter 2 is one of the better introductions out there to what Bayesian estimation is all about (allocating credibility across the range of potential parameter values, conditional on your data). Basically, this made me really "get" that Bayesian estimation is essentially asking "how probable is it that different states of the world could have generated the data in front of me." This is a valuable question to ask, in my opinion. There's also a gem of a chapter on how sampling intentions can mess with frequentist stats (how in the hell could an effect be significant if i thought one thing before the study, but not significant if I thought something else? He makes this clear).

So, why two minds? Kruschke put a ton of work into generating R functions that could be used to analyze different types of data. I could run them. I could get answers. But I often didn't quite grasp what I was doing, or why. And most of the blame is probably on me, a middling ability self-taught R user. Overall, I found the provided functions okay, but not super easy to use. And when I tried to apply them to my own data, I had a hard time altering and customizing some of the analyses or changing priors in an intuitive way.

So, conceptually this book was very helpful. But I tend not to use the actual functions in my own analyses. Which brings us to the final entrant...

5. Statistical Rethinking. Richard McElreath.

This. Book. Is. Awesome.

From the opening chapter, I was hooked. McElreath introduces the story of the Golem of Prague as an analogy to statistical analysis. Golems were evidently mythological critters made of clay that were basically infinitely powerful and infinitely stupid. They would do exactly what you asked them to do. But if your orders were just a little off, the results are catastrophic. McElreath says a lot of stats are like this. A t-test is a golem. Regression is a golem. Ask your stats software to do something, and it'll fucking do it. But you might be getting an answer to a poorly asked question. So you might need to just have an algorithm for picking the right golem, depending on what your data look like. Like this:

There, so statistics is like a recipe that you can mindlessly follow from beginning to end. And I probably still have a flowchart like that floating around my office somewhere. But McElreath urges the reader to step back, rethink things, and then try to build and compare models from the ground up.

The early chapters are super gentle and hands-on introductions to Bayesian thinking. He stresses that the key concepts of the book needn't be Bayesian. But he presents the Bayesian framework because he thinks that Bayesian probability statements are a better fit for our intuitions about what we want from statistics. Many would disagree, but I am persuaded by this argument. Outputs of Bayesian estimation make more intuitive sense to me, for whatever reason.

Next up is very gentle model building with basic regression. Then interactions. Then eventually information theory basics, multilevel modeling, and fun things like imputation of missing data.

McElreath wrote an R package for the book. And there's R code on just about every page. This makes it super easy to apply the modeling techniques. Better yet, he makes it super easy to modify and adapt the models to your own analyses. My last two paper submissions have exclusively used the rethinking package for analyses.

And, to illustrate just how user-friendly, pragmatic, and applied this book is, I have no formal training in either multilevel modeling or Bayesian analysis. But my last submitted paper's focal analysis is a Bayesian multilevel model. From zero to hero, just like that!

I think some previous experience with R would be very beneficial for readers of this book. And I find I don't use all of the functions McElreath's package includes. But with some R basics, this book, and the rethinking package, it's pretty easy to get your data, build a model, extract samples from a posterior, and make intuitive and useful probability statements from that data. What's the posterior probability that the treatment had a positive effect? No problem, you can calculate that very quickly. Want to see how different priors affect your model? Super easy. Want to see how some mildly regularizing priors can reduce model overfitting? Yeah, that's easy too.

I found this book super easy to understand. Granted, that could be because I read it after reading all the others. But I think it would be fairly easy to jump into this one on its own. Personally, I found it to be a gamechanger.

EDIT: Might as well attempt a ranking.

If you only read one, pick Dienes.

If you only read one and want to do analyses now, do McElreath

If you read 2 and are about to teach a stats class, Dienes + Hacking

If you read 2 and want to just start doing more analyses, do Dienes + McElreath

If you read 3 and want to really think about this stuff conceptually, do Dienes + Hacking + Royall

If you want to pick up Bayesian stats reasonably well, go McElreath then Kruschke

If you're mad at one of your grad students, just give them Royall and tell them there'll be a quiz on it in a week.